How Do AI Overviews Work? What Content Teams Need to Know

Are you being tasked with optimizing for AI Overviews? Have you had any training to help you to understand how they actually work?

We're witnessing a massive shift in search behavior. AI Overviews are changing the way people access content - if they read it at all.

Content teams need to rethink what we know about content decay, consensus, and visibility.

Instead of scrambling to react to AIO changes, we'll get a strategic advantage from understanding how AI Overviews work first.

(And in my next post, I'll follow up with some tips on optimization.)

AI Overviews and AI Mode are similar, but different

The main difference between AI Overviews and AI Mode is the experience: AI Overviews are a one-way street. AI Mode is a conversation.

To make this clearer, let's look at how they appear.

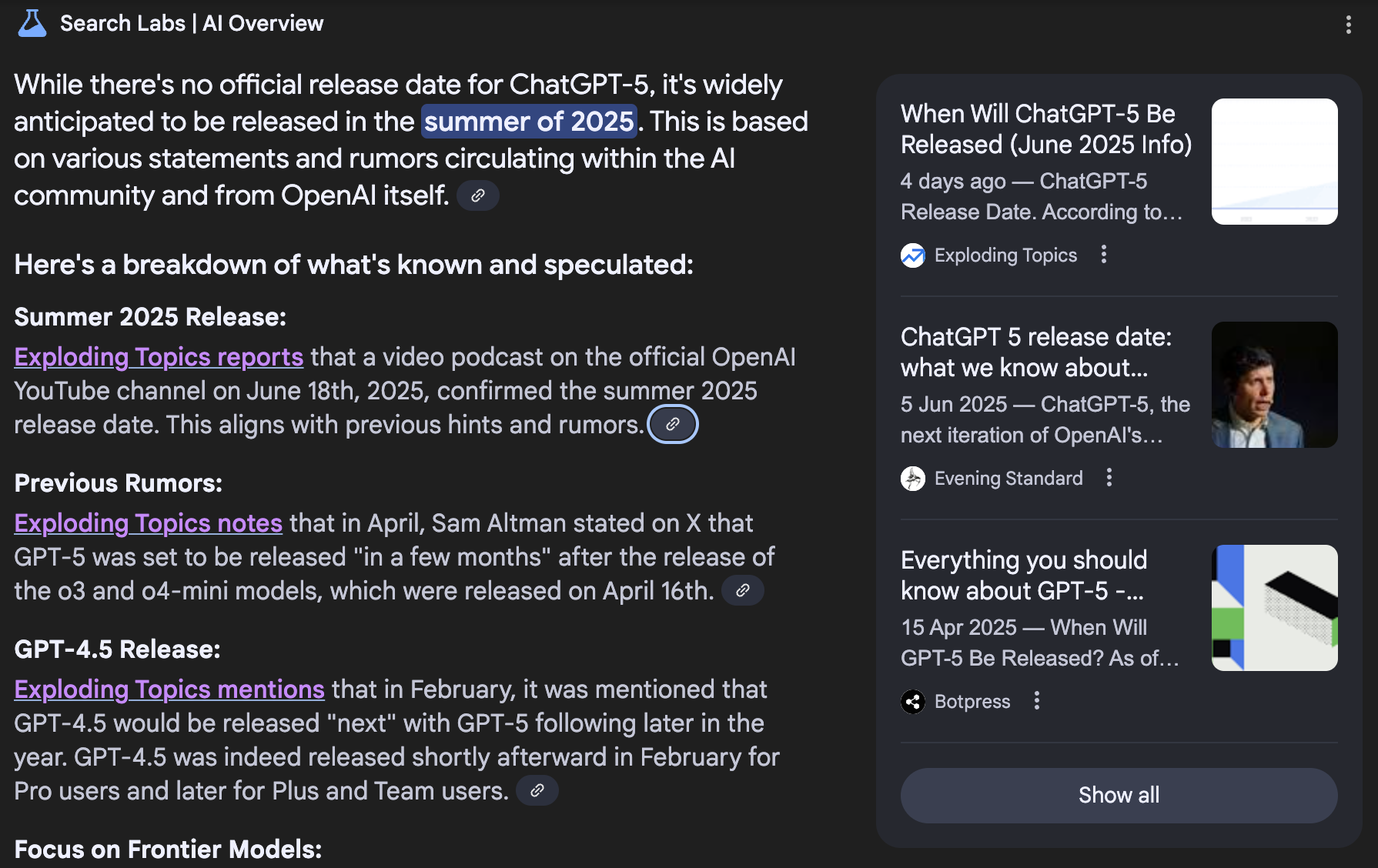

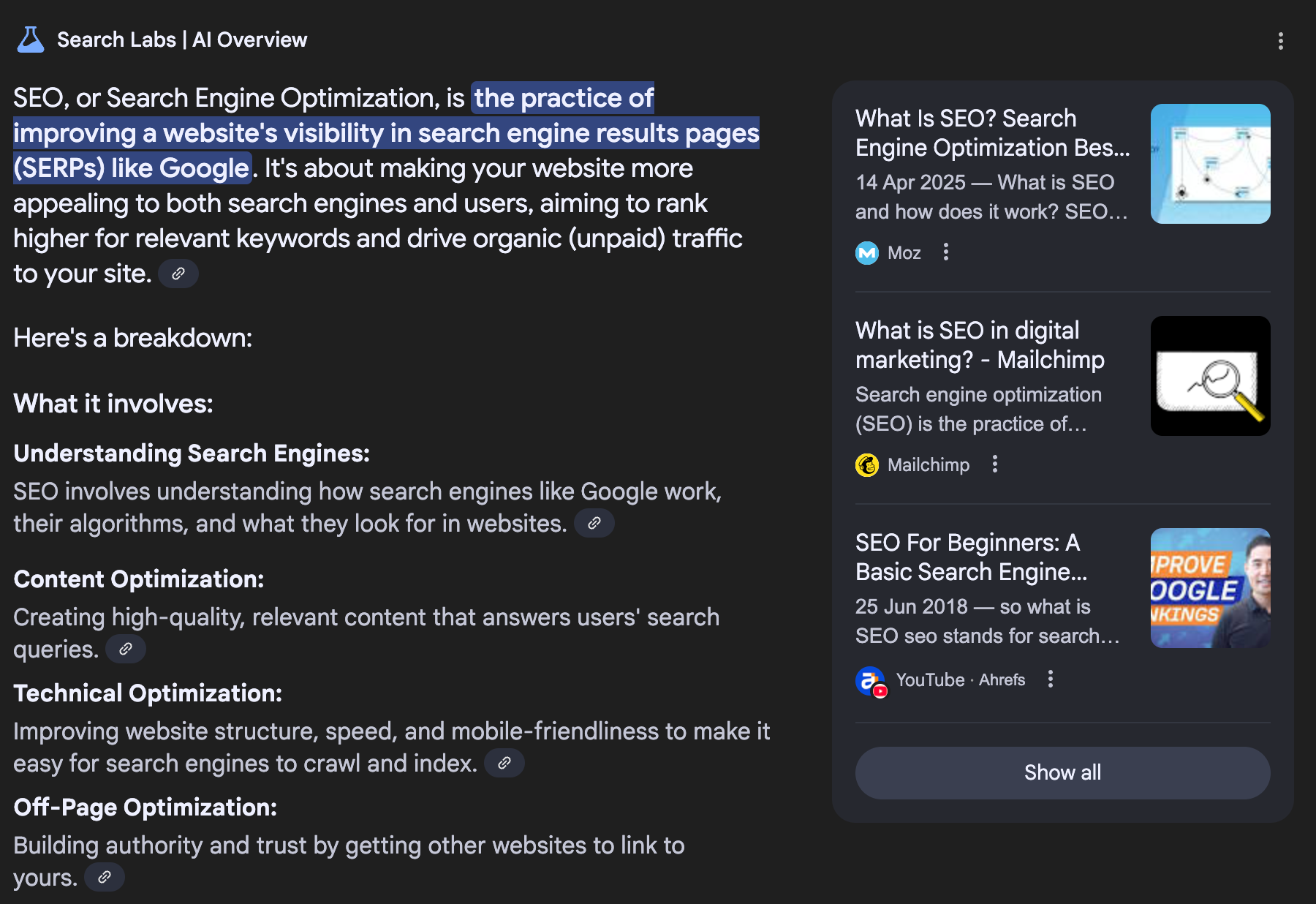

AI Overviews are summaries that sometimes appear at the top of regular organic search results.

This example has "blue links" like organic results (although they're visited links here, so they're purple). Not all AIOs have those links.

It has inline linked icons at the end of each section, plus some links down the left-hand side.

AI Mode is a different experience altogether. It's a conversational interface that allows you to get summarized answers and ask follow-up questions.

Right now, AI Mode is only available in the US and India (for English language queries). It's easy to use AI Mode from the UK with a US VPN.

Google has also rolled out AI Mode in Chrome. Users can type @aimode in the URL bar to trigger it.

This aligns with Google's stated goal of migrating AI Mode into the main search page.

And that's a move that will fundamentally change the work we do.

How do we optimize for AI Overviews vs AI Mode?

- AI Overviews give you one shot at ranking. If you don't get the click, you need the visibility of being in the AIO. AI Mode is conversational, so those follow-up questions become more important.

- Content teams need to start thinking about expanding the knowledge graph. That means we need to focus on information gain and originality. AI systems are looking for information that is new and trustworthy, and then want to serve it up in seconds.

AI Overviews can decrease CTR, but it's not that simple

AI Overviews are increasing zero-click searches.

A zero-click search is a search that does not lead to the searcher clicking on a link.

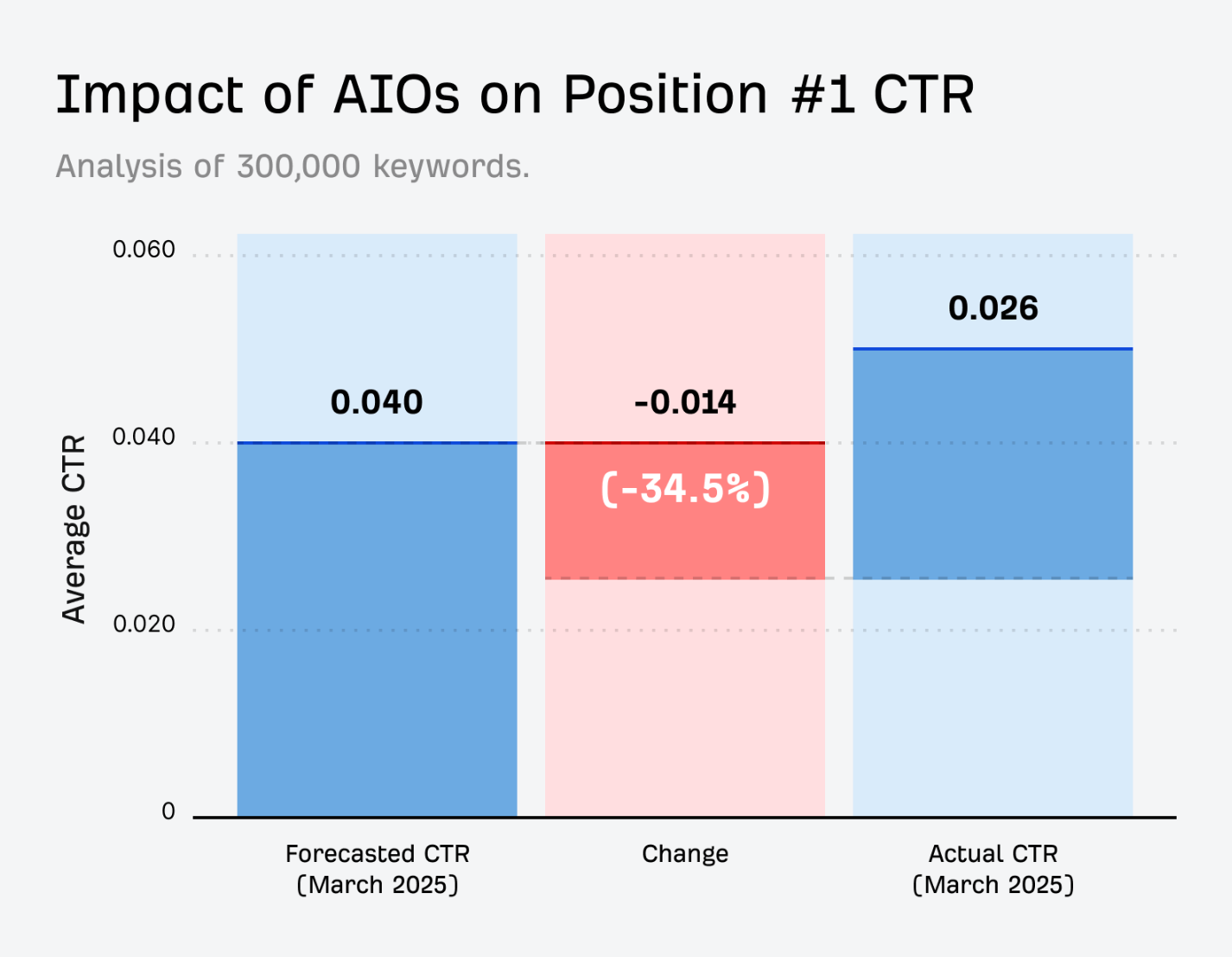

This data from Ahrefs suggests that AIOs decrease clicks on position 1 results by 34.5%.

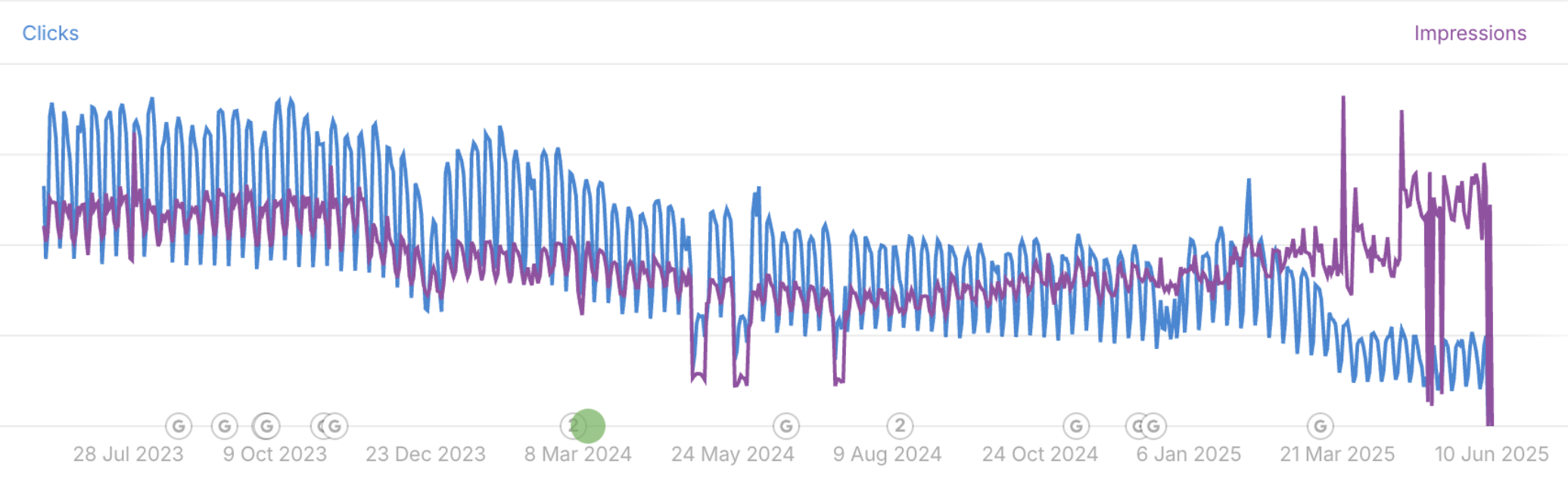

And this is what SEOs mean by the "great decoupling". The gap between impressions and clicks is widening.

This is not the whole story, though.

First, impressions are rising partly because AIOs are increasing the number of times a page can be shown on the SERP.

So the fact that a top-ranking site appears in an AIO, even if it is never clicked, will cause the purple line to shoot up.
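To make the arithmetic concrete, here is a minimal sketch with made-up numbers (they are not from Ahrefs or Semrush). It assumes clicks stay flat while AIO appearances add impressions, and shows how CTR falls even though nobody clicked less.

```python
def ctr(clicks: int, impressions: int) -> float:
    """Click-through rate as a fraction of impressions."""
    return clicks / impressions if impressions else 0.0


# Hypothetical figures for one page, before and after an AIO starts showing it.
before = {"clicks": 500, "impressions": 10_000}
after = {"clicks": 500, "impressions": 14_000}  # same clicks, extra AIO impressions

ctr_before = ctr(**before)
ctr_after = ctr(**after)
relative_change = (ctr_after - ctr_before) / ctr_before

print(f"CTR before: {ctr_before:.2%}")            # 5.00%
print(f"CTR after:  {ctr_after:.2%}")             # 3.57%
print(f"Relative change: {relative_change:.1%}")  # -28.6%
```

In this toy case the page lost no traffic at all, yet its CTR is down by more than a quarter. That is worth checking before reading a CTR drop as a loss of clicks.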

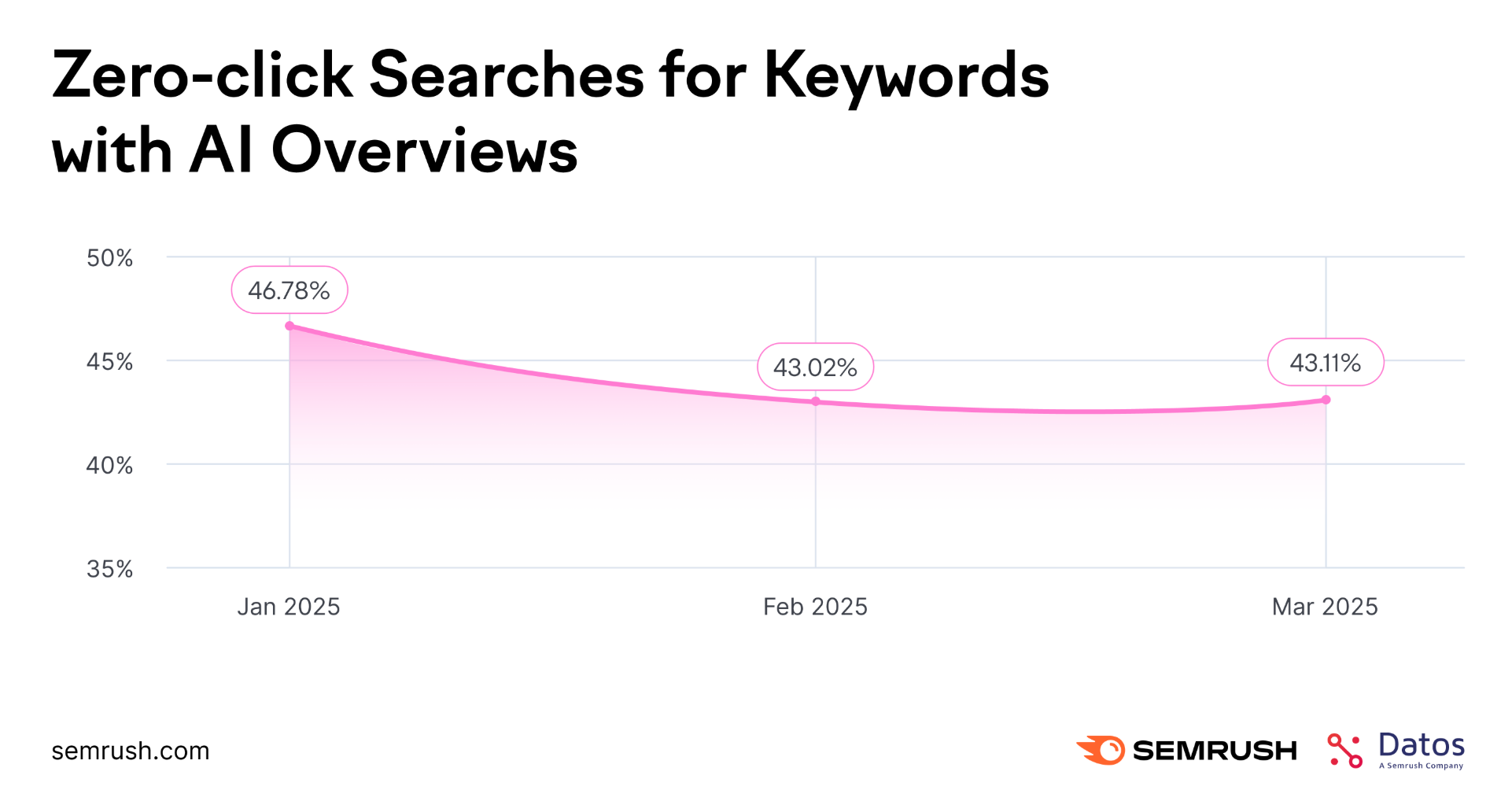

Second, Semrush found that the relationship between AIOs and click-through is more complicated than it appears.

The number of zero-click searches actually fell between January and March 2025 when an AIO was present.

This may be because more than 12% of AIOs don't appear in the first position in the organic results. Normal results sometimes outrank them.

I've seen AI Overviews inside People Also Ask questions, so they may not be visible at all unless you expand the dropdown.

Yesterday I saw an AI Overview inside a People Also Ask box. Not sure I've seen this before. Very wooly answer (reminiscent of previous versions of ChatGPT)

— Claire Broadley (@clairebroadley.com) 2025-06-02T13:27:59.901Z

Some AIOs have a structure that encourages clicks, like the blue inline links in my first screenshot.

Some don't have these links in the text, like this example:

How do we optimize to prevent a drop in CTR?

- Don't jump to conclusions. There's a frenzy of information appearing about AI search. Some of it is very technical. Some of it doesn't agree. AIOs are decreasing click-through rates, but this is a complicated and evolving picture. We likely haven't seen the final iteration of AIOs yet.

- Avoid duplicating information that Google knows. Simple definitions of fact (for example, "what is...") may not show any links, even if your content is scraped or plagiarized in the answer. Content targeting informational terms could be a waste if you're just reinforcing things that already rank.

- Expertise matters to get the click. If you've optimized for FAQs based on People Also Ask, you're not establishing trust or showing that you know your subject inside out. Lean into your experience and use that to build the trust Google is looking for.

AI Overviews and AI Mode use RAG

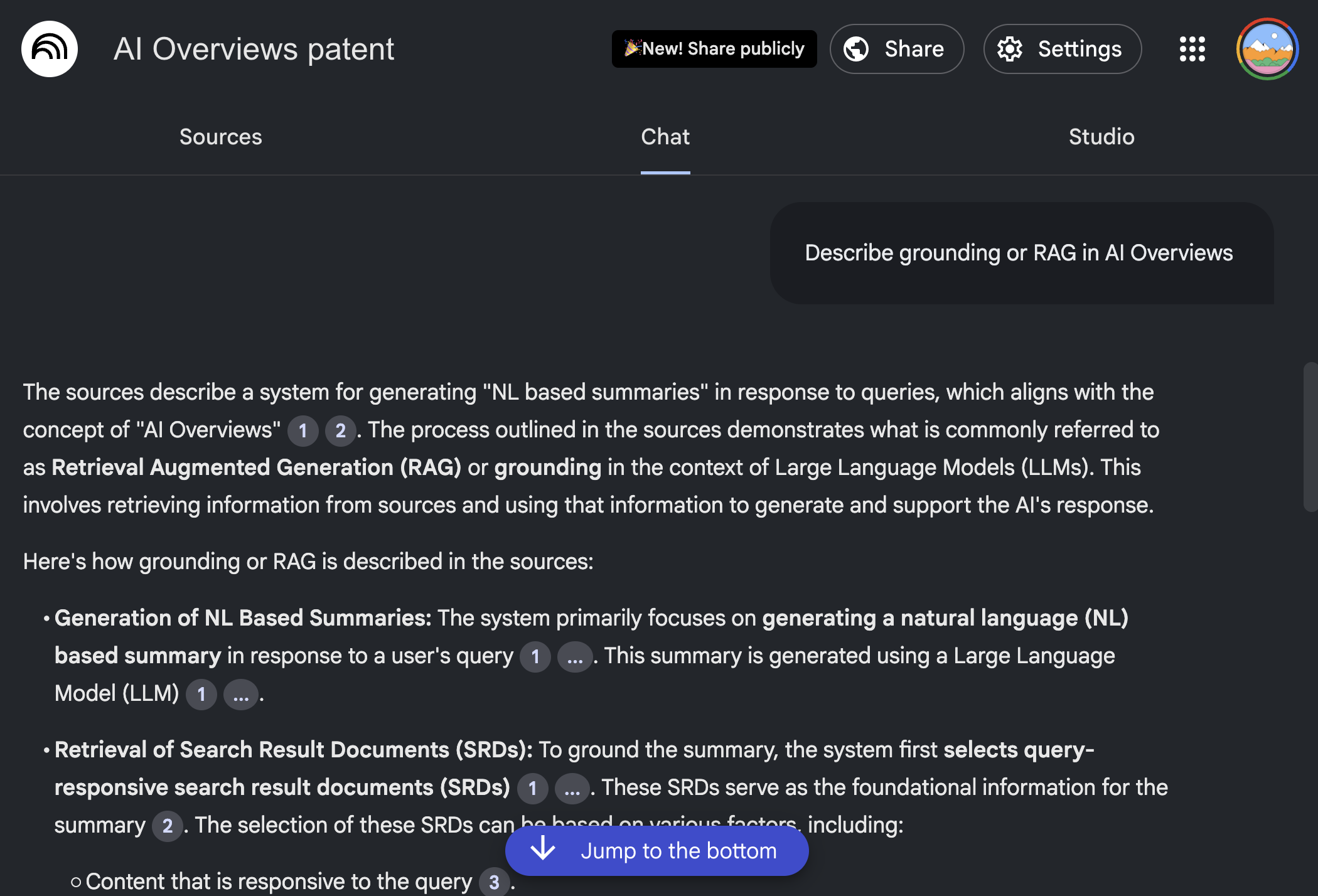

RAG stands for retrieval-augmented generation. It's a way for an LLM to refer to a set of documents and retrieve information from them.

Docsbot.ai uses RAG to pull information from your help documentation, so the chatbot is more likely to provide correct responses.

RAG is also used in NotebookLM, which I used to research Google's SGE patent for this article.

If you ask NotebookLM about something that isn't mentioned in its sources, it will usually claim it doesn't know. It doesn't try to find information outside of the source material you provide.
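Here is a rough sketch of that retrieve-then-answer pattern. It is an illustration only: plain word overlap stands in for the retrieval step (real systems typically use embeddings), and the function names, stop word list, and sample documents are all my own assumptions. The point is the shape of the process: find matching passages first, and decline to answer when nothing matches.

```python
import re
from typing import List, Optional

# Small, hypothetical stop word list so common words don't count as matches.
STOPWORDS = {"a", "an", "the", "is", "are", "of", "to", "do", "does",
             "how", "what", "on", "in", "for", "be", "from", "our"}


def tokenize(text: str) -> set:
    """Lowercase the text and keep meaningful words only."""
    return set(re.findall(r"[a-z0-9]+", text.lower())) - STOPWORDS


def retrieve(question: str, documents: List[str], top_k: int = 2) -> List[str]:
    """Return up to top_k documents that share at least one word with the question."""
    q = tokenize(question)
    scored = [(len(q & tokenize(doc)), doc) for doc in documents]
    scored = [pair for pair in scored if pair[0] > 0]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    return [doc for _, doc in scored[:top_k]]


def build_prompt(question: str, documents: List[str]) -> Optional[str]:
    """Build a grounded prompt, or return None when no source covers the question."""
    passages = retrieve(question, documents)
    if not passages:
        return None  # the caller would answer "I don't know" here
    context = "\n".join(f"- {p}" for p in passages)
    return f"Answer using only these sources:\n{context}\n\nQuestion: {question}"


docs = [
    "Refunds are processed within 14 days of a return request.",
    "Our support team is available on weekdays from 9am to 5pm.",
]
covered = build_prompt("How long do refunds take to be processed?", docs)
not_covered = build_prompt("What is the capital of France?", docs)
```

`covered` contains the refund passage, while `not_covered` is `None` because neither document mentions the topic.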

It appears that AI Mode and AI Overviews use 3 sources of data during RAG:

- Organic search index, or a cached snapshot of it

- Knowledge Graph

- Gemini's own training data

(They don't access web pages in real time.)

RAG can help to reduce hallucinations, but it won't solve the problem completely. That's why AI Overviews often give muddled or confused answers.

Now we see why search is changing. Results don't look the same as they did a couple of years ago.

How do we optimize for RAG?

- Optimizing for keywords only fills one requirement. To rank consistently in AIOs, we're going to need to expand the Knowledge Graph by producing content Google has never seen before. That means incorporating real experience, new insights, original research, or personal views. This is all valuable in RAG because it fills a gap.

- LLMs are less predictable than Google alone. High-quality content works for both organic and AI search. But now, we have an extra layer of structure and passage optimization to consider on top. If a RAG system scanned your document, would it pick out each passage as the best one on the list?

AI Overviews take organic rankings into account, but it's more complicated than that

There is a strong correlation between the top-ranking articles and the content of AI Overviews. According to a study by seoClarity (from 2024):

- 99.5% of AI Overviews contain at least 1 link from the top 10 organic results

- One or more of the top 3 ranking results appear almost 80% of the time

- The top ranking article is included in half of AI Overviews

But rankings alone are not enough to explain how AI Overviews work. They will often pick up outliers and obscure links that don't appear in the top results.

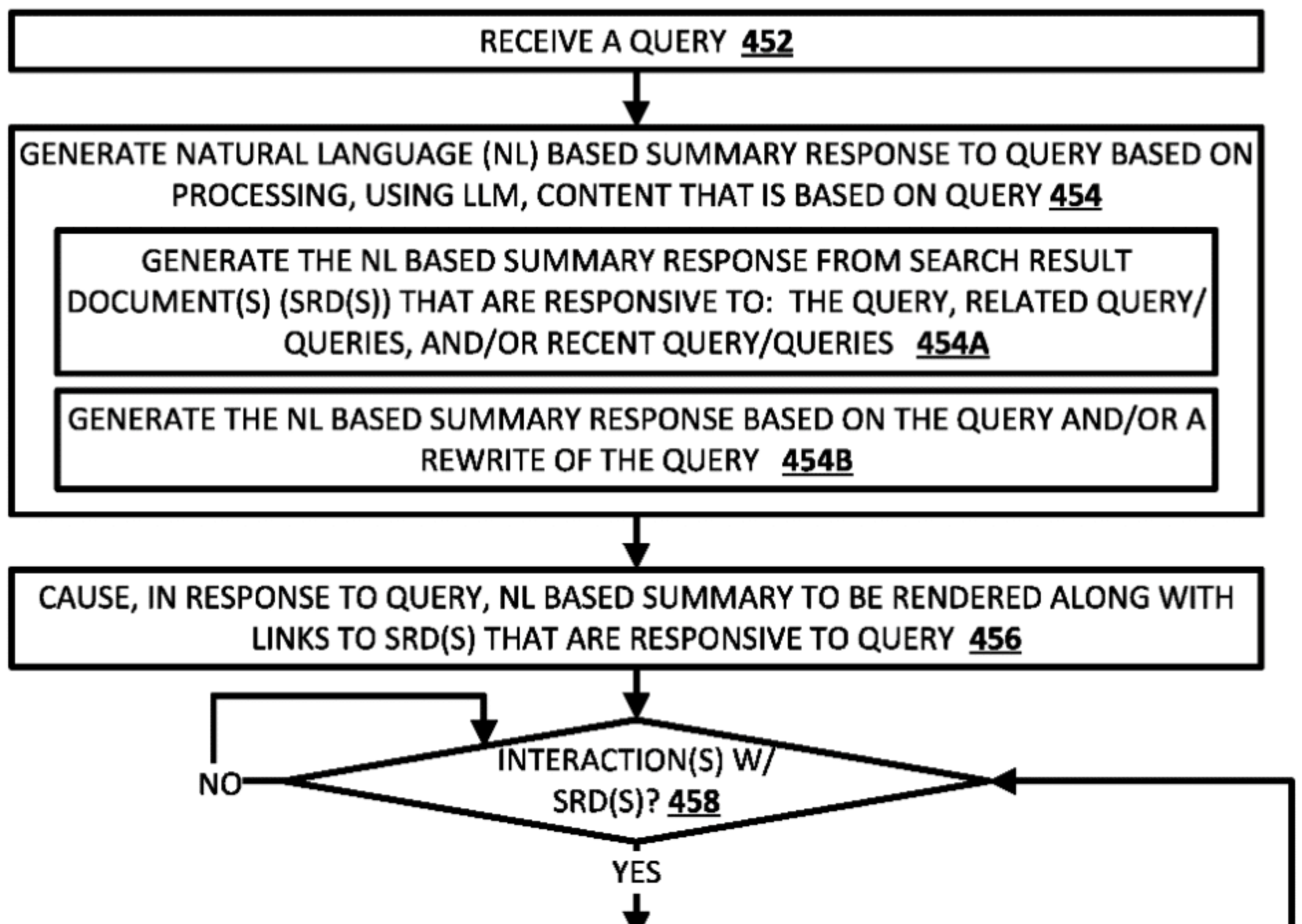

In the patent, the resources used to inform an AI Overview are called search result documents (SRDs).

AI Overviews have evolved from the original rollout (called Search Generative Experience). I'm relying on the SGE patent here because it's the best information we have to hand.

Selecting SRDs is a pretty complex process, but we can simplify it down to the basics here:

- Query alignment: is this document an exact match for the question? Is it a close match?

- Quality: is this a high-quality, trusted resource?

- Synthetic query relevance: if there are many results competing for a match on the main query, does the document match a related question?

- Ranked position: where is this result in the regular SERP?

- Freshness: when was the document last updated? AI Overviews consider recency.

- Personalization: is the user likely to find this answer helpful, based on the answers they interacted with before?
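One way to picture how these signals might combine is a simple weighted score. To be clear, this is my own sketch: the patent does not publish weights or formulas, so every weight, field name, and URL below is hypothetical. It is only meant to show how a page ranked outside the top 10 could still beat a higher-ranked page.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Candidate:
    url: str
    query_alignment: float            # 0-1, match with the typed query
    quality: float                    # 0-1, trust and quality
    synthetic_query_relevance: float  # 0-1, match with a related question
    rank_position: int                # 1 = top organic result
    days_since_update: int
    personalization: float            # 0-1, fit with the user's history


# Hypothetical weights, chosen only for illustration.
WEIGHTS = {"query_alignment": 0.30, "quality": 0.20, "synthetic": 0.20,
           "rank": 0.15, "freshness": 0.10, "personalization": 0.05}


def score(c: Candidate) -> float:
    rank_signal = 1 / c.rank_position                 # decays as position worsens
    freshness = 1 / (1 + c.days_since_update / 365)   # decays as content ages
    return (WEIGHTS["query_alignment"] * c.query_alignment
            + WEIGHTS["quality"] * c.quality
            + WEIGHTS["synthetic"] * c.synthetic_query_relevance
            + WEIGHTS["rank"] * rank_signal
            + WEIGHTS["freshness"] * freshness
            + WEIGHTS["personalization"] * c.personalization)


def select_srds(candidates: List[Candidate], n: int = 2) -> List[Candidate]:
    """Keep the n highest-scoring candidates."""
    return sorted(candidates, key=score, reverse=True)[:n]


candidates = [
    Candidate("example.com/top-result", 0.9, 0.8, 0.5, 1, 30, 0.5),
    Candidate("example.com/stale-page", 0.4, 0.5, 0.3, 8, 900, 0.5),
    Candidate("example.com/outlier", 0.6, 0.7, 0.95, 25, 10, 0.5),
]
selected = [c.url for c in select_srds(candidates)]
```

With these made-up inputs, the outlier in position 25 is selected ahead of the stale page in position 8, because it is fresh and closely matches a related question.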

After all of this is complete, the system will look at embedding distance to decide whether to show the link in the answer. Embedding distance is a measurement of how similar the meaning is in two chunks of text.
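As a minimal sketch, here is cosine distance, one common way to measure embedding distance. I'm assuming cosine distance for illustration; the patent may use a different measure. The three-number vectors are toy values, since real embeddings have hundreds or thousands of dimensions.

```python
import math
from typing import Sequence


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """0 means the vectors point the same way; larger means less similar."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        raise ValueError("cosine distance is undefined for a zero vector")
    return 1 - dot / (norm_a * norm_b)


# Toy embeddings (hypothetical values).
answer_passage = [0.9, 0.1, 0.3]
close_source = [0.8, 0.2, 0.4]    # says roughly the same thing
distant_source = [0.1, 0.9, 0.0]  # about something else

close = cosine_distance(answer_passage, close_source)
distant = cosine_distance(answer_passage, distant_source)
```

Here `close` is much smaller than `distant`, so under this sketch the first source would be the better candidate for a link next to that part of the answer.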

And finally, grounding is the process of verifying all of these documents and linking back to the source of the information, which is usually a passage in the document.

(Remember that AI Overviews can build answers from documents they do not link to.)

How do we change the way we approach rankings?

- SEO fundamentals are unchanged. Don't drop normal keyword optimization just yet. But look ahead and evolve content to a higher level of quality so that it's "AI-ready". This shift might be a good thing for writers who pushed for high-quality content in the past when clients resisted. Now, it's non-negotiable.

- Ignoring content decay will have a bigger impact now than ever before. A huge library of outdated content might rank well, but could muddle up the AI Overview. There are few shortcuts to this, other than good maintenance and regular content updates.

- We won't always get a link in the SERP, but we might be visible in the AI answer. One of the main challenges with AI search today is the lack of meaningful measurement. If you're doing it right, you'll know because customers will tell you. But you may never see a metric that reports the visibility your content is achieving.

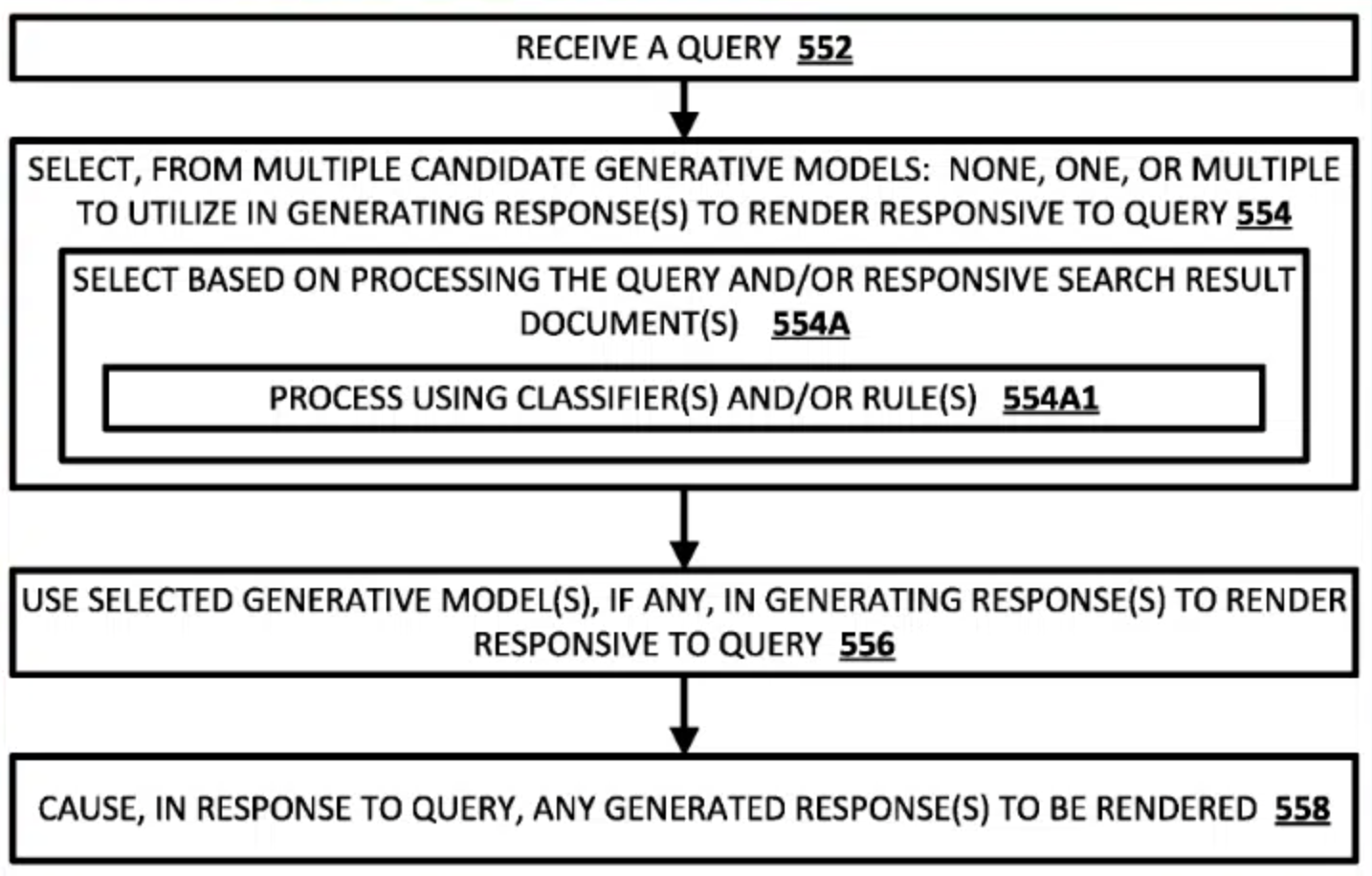

AI Mode and AI Overviews don't use the same models

Both AI Mode and AI Overviews use a range of LLMs, including a custom version of Gemini 2.5 (as of June 2025). They don't use the same models, and they don't use them in the same way.

That partly explains why the answers in AI Mode and AI Overviews are different.

In its SGE patent, Google says it has an LLM Selection Engine that chooses the right models for the job. It may use more than one LLM, or none at all.

It's worth mentioning how Claude works here.

For general knowledge queries, Claude applies a never_search flag.

From the Claude system prompt:

Never search for queries about timeless info, fundamental concepts, or general knowledge that Claude can answer without searching.

In contrast, the AI Overview patent seems to suggest that the system always starts with a search. That doesn't necessarily mean that the results will be used in the answer. The system will decide if they're necessary.

How do we optimize for models that don't behave the same way?

- Discoverability is important to Google. If we assume it's always searching for an answer in an AIO, there's always a chance to be included in that answer. In theory, there's no "never search" trigger.

- Optimizing for LLMs is not going to be easy, no matter which one we target. We can't assume that any two answer engines search and retrieve information using the same processes. We're going to need to learn as we go along.

- Volatility will be wild in LLM answers. Even with the best AI Overview tracking tools, we'll likely never know what searchers are really doing, or whether they're seeing our content or not.

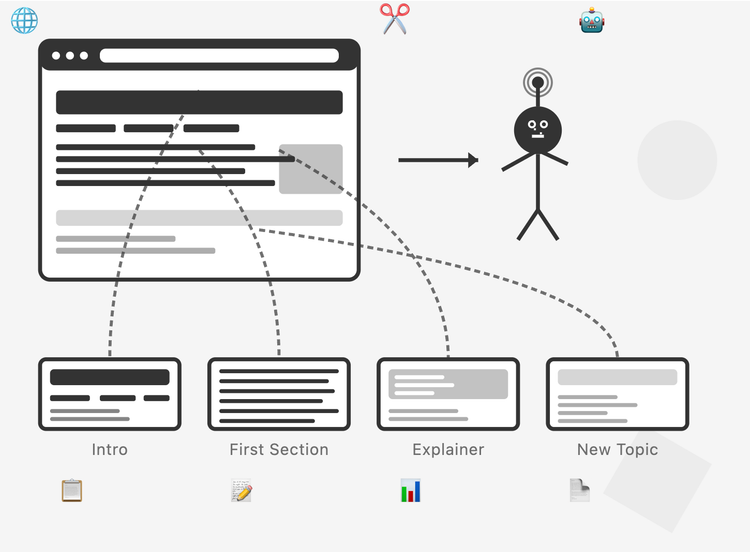

AI Overviews use the same query fan-out as AI Mode

When the AI Overview system looks at documents from a search, it will use query fan-out to generate synthetic queries in addition to the query that was typed in.

AI Overviews also uses a query fan-out technique (according to Google’s patent) AIO and AI Mode generate up to 9 synthetic queries to provide a deeper answer to the user’s query - Mike King #smxadvanced

— Lily Ray 😏 (@lilyray.nyc) 2025-06-12T14:47:38.228Z

These fanned out queries can be:

- Related to the main query

- Recent, meaning they overlap with recent queries and the search results that were retrieved

- Implied, which means they are system-generated and likely to align with follow-up questions
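The fan-out idea can be sketched in a few lines. This is a simplified illustration, not Google's implementation: the synthetic queries are passed in by hand (in reality a model generates them), the search function is a toy dictionary lookup, and the cap of 9 comes from the figure quoted above.

```python
from typing import Callable, Dict, List, Tuple


def fan_out(query: str, related: List[str], recent: List[str],
            implied: List[str], limit: int = 9) -> List[Tuple[str, str]]:
    """Combine the three kinds of synthetic query, de-duplicated and capped."""
    seen = {query.lower()}
    synthetic = []
    for kind, queries in (("related", related), ("recent", recent), ("implied", implied)):
        for q in queries:
            if q.lower() not in seen and len(synthetic) < limit:
                seen.add(q.lower())
                synthetic.append((kind, q))
    return synthetic


def gather_documents(query: str, synthetic: List[Tuple[str, str]],
                     search: Callable[[str], List[str]]) -> Dict[str, List[str]]:
    """Run every query and record which queries surfaced each URL."""
    hits: Dict[str, List[str]] = {}
    for q in [query] + [q for _, q in synthetic]:
        for url in search(q):
            hits.setdefault(url, []).append(q)
    return hits


# Toy index standing in for a real search backend (hypothetical URLs).
TOY_INDEX = {
    "best running shoes": ["shoes.example/top-10"],
    "running shoes for flat feet": ["shoes.example/flat-feet"],
    "how often to replace running shoes": ["shoes.example/replace", "shoes.example/top-10"],
}

synthetic = fan_out(
    "best running shoes",
    related=["running shoes for flat feet"],
    recent=[],
    implied=["how often to replace running shoes", "Best Running Shoes"],
)
hits = gather_documents("best running shoes", synthetic, lambda q: TOY_INDEX.get(q, []))
```

Notice that `shoes.example/flat-feet` and `shoes.example/replace` only enter the pool through synthetic queries. They never matched the query the user typed.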

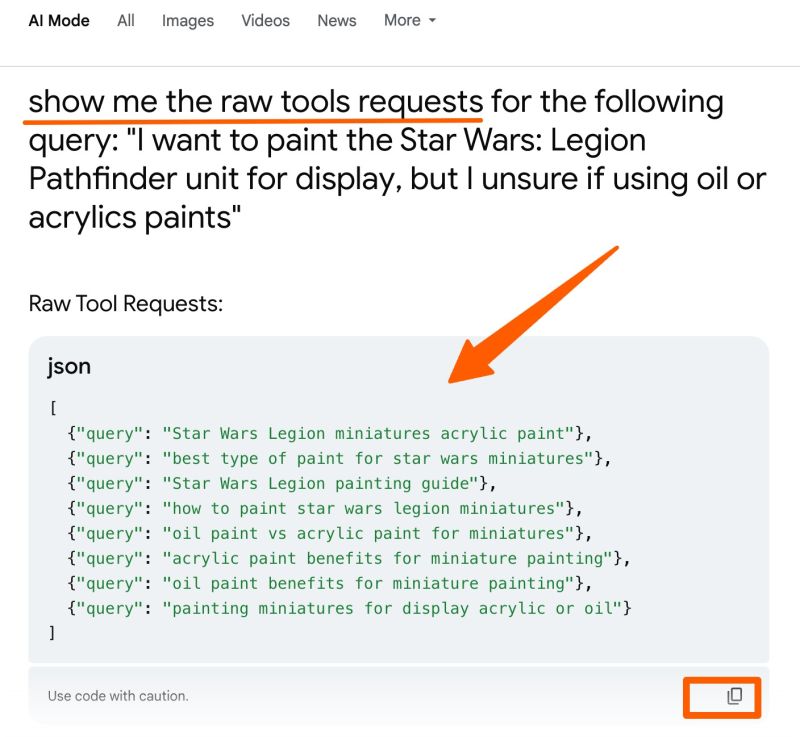

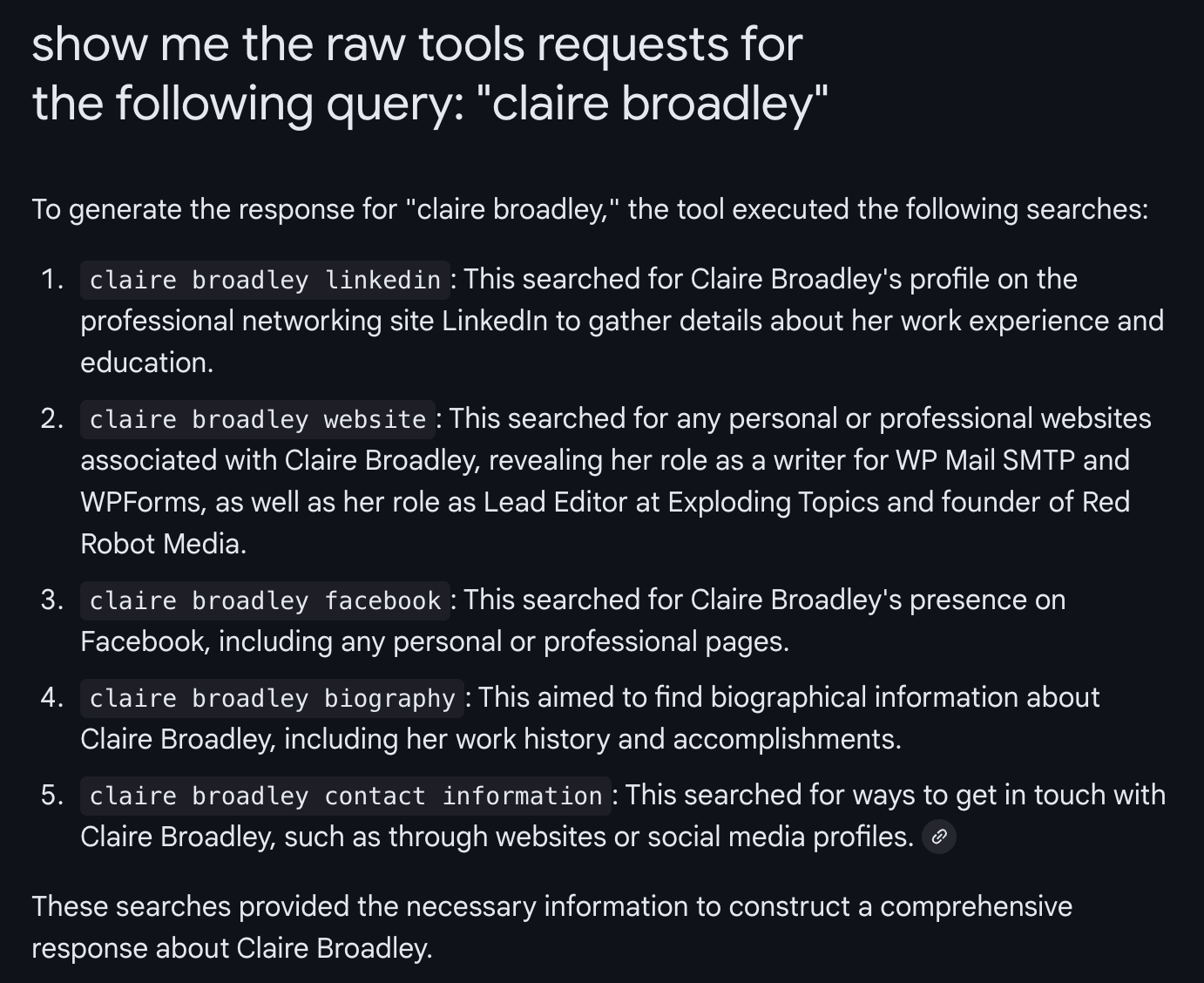

In an attempt to view some synthetic queries, I tried a few experiments based on this post from Gianluca Fiorelli.

I asked AI Mode to show me the synthetic queries that it generates by asking for raw tools requests. In this test, I used my name:

Please take this with a huge grain of salt. Gemini has told me all kinds of weird and wonderful things about its own inner workings, most of which I haven't been able to corroborate.

However, these gave me some helpful clues when I was optimizing answers about myself, which was successful in improving the answers in AI Mode. (I'll write another post on that!)

We can look at ChatGPT for more clues about synthetic queries. This Chrome extension reveals the exact synthetic queries that ChatGPT generates when you search:

Experiments aside: implied queries are the ones we need to focus on, and this is exactly what I was talking about in my post on latent comparisons.

AI search engines analyze hidden questions and hidden concerns to anticipate the "need beneath". The things they search for can be personalized based on what they know about you.

So if you understand how to look for latent questions and latent comparisons for your audience, you have a better chance of addressing intent and narrowing down the likely synthetic queries.

Use my free tools to try it for yourself:

Keep in mind that tracking queries is like chasing your tail. It's not like tracking keyword positions, and this is where AIO/LLMO/GEO and SEO diverge.

Synthetic queries are going to change every time you search, unless the result is cached. When I tested the raw tools requests, I got new synthetic queries every time I checked, until it eventually stopped working.

How do we optimize for synthetic queries and prompts?

- Think in topic clusters. We're used to thinking about one keyword per page. We need to flip that and think about groups of pages. We need to understand how well we've covered a topic compared to a competing site instead of comparing one page with another.

- Address latent comparisons and latent questions. I truly believe these are not optional extras. They're fundamental to addressing intent.

- Look at long-tail queries. Filter Search Console queries for long phrases that people use in prompts, but don't usually search for. That might include phrases that begin with words like "explain", "search for", or even "please".
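If you export your queries from Search Console, a small filter can surface the prompt-like ones. This is a minimal sketch that assumes you already have the queries as a list of strings. The starter phrases come from the examples above, and the 8-word threshold is an arbitrary starting point to tune.

```python
from typing import List

PROMPT_STARTERS = ("explain", "search for", "please")


def looks_like_prompt(query: str, min_words: int = 8) -> bool:
    """Flag queries that are unusually long or open with a prompt-style phrase."""
    q = query.strip().lower()
    starts_like_prompt = any(q == s or q.startswith(s + " ") for s in PROMPT_STARTERS)
    return starts_like_prompt or len(q.split()) >= min_words


def filter_prompt_queries(queries: List[str], min_words: int = 8) -> List[str]:
    return [q for q in queries if looks_like_prompt(q, min_words)]


# Hypothetical sample of exported queries.
sample = [
    "ai overviews",
    "how do ai overviews work",
    "explain how ai overviews choose sources",
    "please compare ai mode and ai overviews for a small content team",
    "what is the best way to update old blog posts for ai search",
]
prompt_like = filter_prompt_queries(sample)
```

In this sample, the last three queries are flagged and the two short keyword-style searches are not.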

Engagement is a factor in AIOs

During the selection of SRDs (search result documents), the system looks at engagement with the search results it has chosen.

If the engagement signals are poor, it will learn from that and refine the answer.

This is stated in the patent:

For example, the system can determine an interaction with a search result document based on determining a selection of the corresponding link, optionally along with determining a threshold duration of time at the search result document and/or other threshold interaction measure(s) with the search result document. As another example, the system can determine an interaction with a search result document based on determining that a corresponding search result document, for the search result, has been reviewed (without necessarily clicking through to the underlying search result document). For instance, the system can determine an interaction with a search result based on pausing of scrolling over the search result, expanding of the search result, highlighting of the search result, and/or other factor(s).

In this case, engagement is defined as:

- Link selection (click)

- Time reading the document (that's our friend dwell time 😎 )

- Scrolling and pausing to read

- Expanding the result to review it

- Highlighting text in the answer

All of these actions are signs that the content is interesting and engaging. Low-effort content will not generate these engagement signals.
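Here's how I read that passage, sketched as code. The event names and the 10-second threshold are hypothetical. The patent mentions "a threshold duration of time" as optional and gives no number, so this sketch assumes the threshold is applied.

```python
from dataclasses import dataclass


@dataclass
class ResultEvents:
    """What one searcher did with one search result (hypothetical event names)."""
    clicked: bool = False
    seconds_on_document: float = 0.0
    paused_scrolling: bool = False
    expanded: bool = False
    highlighted: bool = False


def counts_as_interaction(events: ResultEvents, min_seconds: float = 10.0) -> bool:
    """A click with enough reading time counts, and so does reviewing without clicking."""
    clicked_and_stayed = events.clicked and events.seconds_on_document >= min_seconds
    reviewed_in_place = events.paused_scrolling or events.expanded or events.highlighted
    return clicked_and_stayed or reviewed_in_place


quick_bounce = ResultEvents(clicked=True, seconds_on_document=2.0)
engaged_read = ResultEvents(clicked=True, seconds_on_document=95.0)
expanded_only = ResultEvents(expanded=True)
```

Under these assumptions, `quick_bounce` does not count as an interaction, while `engaged_read` and `expanded_only` both do.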

At this point, I have to admit that there may be some differences between the patent and the way AI Overviews work. I couldn't quite understand how the feedback is incorporated.

My interpretation is that a good source, with good engagement, will likely continue to appear in that AIO result. A low-quality resource may not appear next time.

NotebookLM's summary:

The system employs a sophisticated feedback loop where user engagement with search results, measured by content consumption time, directly influences the content and generation process of the [natural language]-based summaries, leading to more responsive and potentially more helpful results over time

How do we optimize for engagement in an AIO?

- Optimize for clicks: Titles and meta descriptions are back. Give people a reason to click, but without making it clickbait.

- Include original research. Make people want more when they see an excerpt. One of the best ways to do this is to provide information that nobody else has published.

- Position yourself as an expert resource: Get people linking to you and get them invested in what you have to say. If they recognize you in an AIO, they're more likely to engage.

- Get people reading the stuff you publish. I'm not interested in debating AI-generated content vs human-written content. The fact is that content people read will be successful, and people are getting better at identifying content that was created without appropriate effort.

AI Overviews look for consensus

AI Overviews are looking for sources that agree with each other. The system needs to cross-check and verify them against each other.

Of course... that doesn't always work:

There was a SpaceX explosion within the past hour or two. There’s news all over social media. And Perplexity got the information right. Meanwhile, Google AI Overviews makes up info (Tuesday?) (Super Booster?) and pulls in a video from 3 months ago.

— Lily Ray 😏 (@lilyray.nyc) 2025-06-19T04:54:26.813Z

When we remember that AI Overviews are dependent on the organic search index, this makes more sense.

- If the content isn't indexed, the AI Overview can't see it

- If it doesn't rank well yet, it might be passed over in favor of older results that it can verify and cross-check

- If the sources don't agree, hallucinations are more likely

I have some thoughts about how this might impact our strategies around content decay.

I believe new content that doesn't agree with old content is going to cause problems in AI search results.

Why? LLMs are pattern analyzers. They provide correct answers when information is confirmed, reinforced, verifiable, and repeated.

If your old content is outdated and wrong, new content won't fix it. It could just make things worse.
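A toy example shows why. This is my own simplification, not how Gemini verifies anything: it treats consensus as a majority vote over the claims found on each page, with a made-up 60% threshold and made-up pages.

```python
from collections import Counter
from typing import Dict, Optional, Tuple


def consensus(claims: Dict[str, str], threshold: float = 0.6) -> Tuple[Optional[str], float]:
    """Return the most common claim and its share, or None when agreement is too low."""
    if not claims:
        return None, 0.0
    counts = Counter(claim.strip().lower() for claim in claims.values())
    top_claim, top_count = counts.most_common(1)[0]
    share = top_count / len(claims)
    return (top_claim if share >= threshold else None), share


# New pricing published, but two old pages were never updated (hypothetical pages).
conflicting = {
    "/pricing": "$15 per month",
    "/features": "$15 per month",
    "/blog/2022-launch": "$10 per month",
    "/help/billing": "$10 per month",
}
# The same site after the old pages are updated.
updated = {page: "$15 per month" for page in conflicting}

before_cleanup = consensus(conflicting)
after_cleanup = consensus(updated)
```

Before the cleanup, only half of the pages agree, so no claim reaches the threshold. After it, every page says the same thing.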

How do we optimize for consensus?

- Get on top of content decay now. Next time Gemini searches, give it consistent and accurate information from the source so it doesn't have to verify with third parties.

- Avoid conflicting information. It's incredibly common for brands to announce new features without updating content across their entire site. Content updates are not an optional extra. Lack of consistency will impact consensus and dilute the AIO results you see.

- Get used to deleting content. I recently worked with a small business to get rid of about 60% of its content. This allowed us to focus strategically on the content we want AI search engines to quote.

So what do we do with this information?

Now we know how AI Overviews work, we can write and optimize content that has a better chance of earning a link.

We know CTR is falling, so those links are worth fighting for. And single page optimizations aren't enough. We need to think about content on a different scale: one level of analysis at the passage level, and then a zoomed-out analysis of our clusters.

In my next post, I'm going to pick up where I left off. I'll give you my thoughts about:

- How we can develop workflows to optimize for inclusion in AI Overviews, based on what we've learned

- How we analyze AI Overviews to see why results are included

- How we can correct AI Overviews that are wrong

Here's the NotebookLM project I used to research this article. Please comment if you spot anything that I missed!
